Multilayer Perceptron Experiments on Sine Wave Part 2

In the previous experiment, we showed that an MLP with ReLU activations cannot extrapolate the sine function. Recent research has shown that ReLU networks tend to extrapolate linearly and are therefore ill-suited to extrapolating periodic functions (Xu et al., 2021).

To induce a periodic extrapolation bias in neural networks, Ziyin et al. (2020) proposed a simple activation function called the “Snake” activation, of the form \(x + \frac{1}{a}\sin^2(ax)\), where \(a\) can be treated either as a constant hyperparameter or as a learned parameter.
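As a quick worked check of what the formula does (our own derivation, not taken from the cited paper), the identity \(\sin^2\theta = \frac{1 - \cos 2\theta}{2}\) splits Snake into a linear term plus a periodic term, and differentiating shows it is monotonically non-decreasing:

\[
x + \frac{1}{a}\sin^2(ax) = x + \frac{1 - \cos(2ax)}{2a}
\]

\[
\frac{d}{dx}\left[x + \frac{1}{a}\sin^2(ax)\right] = 1 + \frac{1}{a}\cdot 2\sin(ax)\cos(ax)\cdot a = 1 + \sin(2ax) \ge 0
\]

The linear term lets the output keep growing with \(x\), while the periodic term repeats every \(\pi/a\) and stays between \(0\) and \(1/a\).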

We experimented with the Snake activation to see whether it can fit and extrapolate a simple sine function. We also compared Snake against the following alternative, similar-looking activation functions:

\(\sin(ax)\)

\(\sin^2(ax)\)

\(x + \frac{1}{a}\sin(ax)\)

\(x + \sin^2(ax)\)

\(x + \frac{1}{a}\sin^2(x)\)

\(x\)
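For reference, the candidates (the six alternatives plus Snake) can be written as plain scalar functions. This is a minimal sketch using Python's `math` module; the dictionary keys and the `make_activations` helper are hypothetical names of ours, and in an actual network these functions would be applied elementwise to tensors rather than to single floats.

```python
import math


def make_activations(a: float) -> dict:
    """Map a hypothetical label to each activation compared in this post."""
    return {
        "sin(ax)": lambda x: math.sin(a * x),
        "sin^2(ax)": lambda x: math.sin(a * x) ** 2,
        "x + sin(ax)/a": lambda x: x + math.sin(a * x) / a,
        "x + sin^2(ax)": lambda x: x + math.sin(a * x) ** 2,  # no 1/a scaling
        "x + sin^2(x)/a": lambda x: x + math.sin(x) ** 2 / a,  # no a inside sin
        "x": lambda x: x,  # identity (a purely linear network)
        "snake": lambda x: x + math.sin(a * x) ** 2 / a,
    }


acts = make_activations(a=15.0)  # a = 15, the value used in the experiments
for name, f in acts.items():
    print(f"{name:>15}: f(0.1) = {f(0.1):.4f}")
```

Laid out side by side, each alternative differs from Snake by dropping exactly one ingredient: the linear term, the square, the \(1/a\) scaling, or the \(a\) inside the sine.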

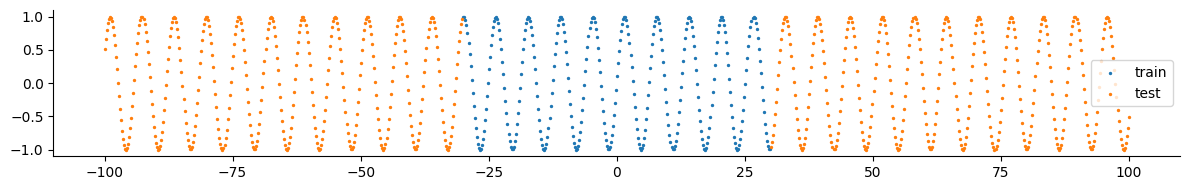

Extrapolation Experiment

We generated synthetic data from the function sin(x), which we aim to learn. The blue points form the training set, which we use to train our model. The orange points form the test set, which we use to check whether the model generalizes, i.e., whether it actually learned the sine function.

Our inputs are the x-values (horizontal axis), and our targets are y = sin(x), which we train the model to predict from x.
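A minimal sketch of how such an extrapolation split could be generated. The post does not state the exact x-ranges or number of points, so `X_MIN`, `X_MAX`, `TRAIN_LO`, `TRAIN_HI`, `N_POINTS`, and the helper `make_sine_split` are placeholder assumptions: a short middle segment is used for training and everything outside it is held out.

```python
import math

# Placeholder values: the post does not give the actual ranges or sample count.
X_MIN, X_MAX = -4 * math.pi, 4 * math.pi
TRAIN_LO, TRAIN_HI = -math.pi, math.pi
N_POINTS = 400


def make_sine_split(n=N_POINTS, x_min=X_MIN, x_max=X_MAX, lo=TRAIN_LO, hi=TRAIN_HI):
    """Return (train, test) lists of (x, sin(x)) pairs on an evenly spaced grid.

    Points with lo <= x <= hi go to the training set (blue); the rest go to
    the test set (orange), so the model must extrapolate outward.
    """
    step = (x_max - x_min) / (n - 1)
    xs = [x_min + i * step for i in range(n)]
    train = [(x, math.sin(x)) for x in xs if lo <= x <= hi]
    test = [(x, math.sin(x)) for x in xs if not (lo <= x <= hi)]
    return train, test


train, test = make_sine_split()
print(len(train), "training points,", len(test), "test points")
```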

The animation below shows the network's parameters and how its fit to the data evolves over training (epochs).

In these experiments, we used:

Xavier Normal initialization

Two hidden layers

256 neurons per hidden layer

A learning rate of 0.0001

\(a = 15\) for the activation functions
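The architecture and initialization listed above can be sketched in dependency-free Python. Only the hidden-layer count, width, Xavier Normal initialization, learning rate, and \(a = 15\) come from the post; the scalar input/output, zero biases, linear output layer, and the helpers `xavier_normal`, `build_mlp`, and `forward` are assumptions of this sketch. The optimizer is not named in the post, so no training loop is shown.

```python
import math
import random

# Settings stated in the post.
HIDDEN_LAYERS = 2
HIDDEN_UNITS = 256
LEARNING_RATE = 1e-4  # would be passed to an optimizer; the post does not name one
A = 15.0


def xavier_normal(fan_in, fan_out, rng):
    """Draw a fan_out x fan_in weight matrix from N(0, std^2),
    with std = sqrt(2 / (fan_in + fan_out)) (Xavier/Glorot normal, gain 1)."""
    std = math.sqrt(2.0 / (fan_in + fan_out))
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def snake(x, a=A):
    return x + math.sin(a * x) ** 2 / a


def build_mlp(rng):
    # Scalar input -> HIDDEN_LAYERS hidden layers of HIDDEN_UNITS -> scalar output.
    sizes = [1] + [HIDDEN_UNITS] * HIDDEN_LAYERS + [1]
    # Zero biases are an assumption; the post only specifies Xavier Normal.
    return [(xavier_normal(m, n, rng), [0.0] * n) for m, n in zip(sizes[:-1], sizes[1:])]


def forward(layers, x, act=snake):
    h = [x]
    for i, (w, b) in enumerate(layers):
        # Affine map: each output unit is a weighted sum of the previous layer.
        h = [sum(wij * hj for wij, hj in zip(row, h)) + bi for row, bi in zip(w, b)]
        if i < len(layers) - 1:  # assumption: the output layer stays linear
            h = [act(v) for v in h]
    return h[0]


layers = build_mlp(random.Random(0))
print(forward(layers, 0.5))
```

For the 256-to-256 hidden layer, the Xavier standard deviation works out to \(\sqrt{2/512} = 0.0625\).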

\(\sin(ax)\)

\(\sin^2(ax)\)

\(x + \frac{1}{a}\sin(ax)\)

\(x + \sin^2(ax)\)

\(x + \frac{1}{a}\sin^2(x)\)

\(x\)

\(x + \frac{1}{a}\sin^2(ax)\) (Snake)

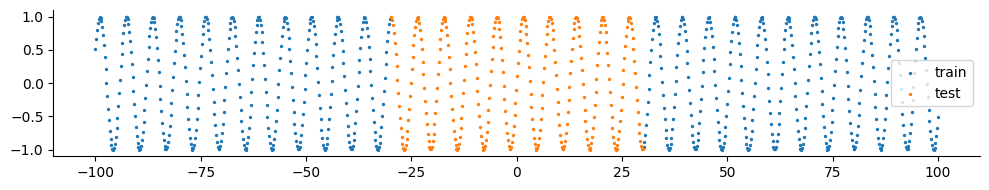

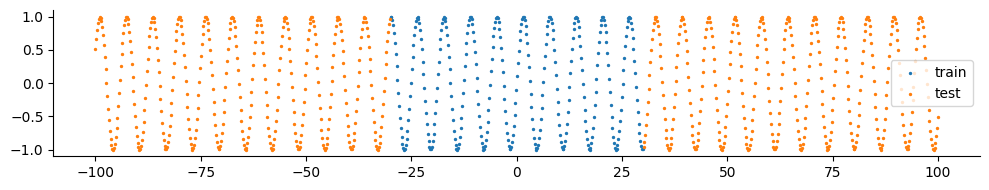

Interpolation Experiment

Now, what if we turn this into an interpolation problem instead of an extrapolation problem? In other words, what if we swap the training and test sets? Will the model be able to infer the missing segment of the sine wave between the training data? We show below the sine wave colored by training set (blue) and test set (orange).

Note that we use the term “interpolate” loosely here. Technically, this is still an extrapolation problem: although the test inputs fall between training points, they lie outside the support of the training distribution.
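The distinction between "between the training points" and "within the support of the training data" can be checked directly. This sketch uses placeholder boundaries (`LO`, `HI`) and grid settings, since the post does not give the exact ranges: the middle segment is held out, every test input lies inside the range spanned by the training inputs, yet the training inputs leave a gap covering the entire test region.

```python
import math

# Placeholder grid and boundaries (the post does not give exact ranges):
# the middle segment [LO, HI] is now held out as the test set.
X_MIN, X_MAX = -4 * math.pi, 4 * math.pi
LO, HI = -math.pi, math.pi
N = 400

xs = [X_MIN + i * (X_MAX - X_MIN) / (N - 1) for i in range(N)]
train_x = [x for x in xs if not (LO <= x <= HI)]  # the two outer pieces
test_x = [x for x in xs if LO <= x <= HI]  # the missing middle piece

# Every test input lies inside the range spanned by the training inputs...
inside_hull = min(train_x) < min(test_x) and max(test_x) < max(train_x)
# ...yet the largest gap between neighboring training inputs is wider than the
# held-out segment, so no training data comes from the region being tested.
gap = max(b - a for a, b in zip(train_x, train_x[1:]))
print(inside_hull, round(gap, 3), round(HI - LO, 3))
```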

\(\sin(ax)\)

\(\sin^2(ax)\)

\(x + \frac{1}{a}\sin(ax)\)

\(x + \sin^2(ax)\)

\(x + \frac{1}{a}\sin^2(x)\)

\(x\)

\(x + \frac{1}{a}\sin^2(ax)\) (Snake)

How does Snake's parameter \(a\) affect the model predictions?
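Before looking at predictions, it helps to see what \(a\) does to the activation itself. This sketch (it does not reproduce the trained models) sweeps a few illustrative values of \(a\), of which only 15 comes from the post, and reports the period \(\pi/a\) and peak height \(1/a\) of the periodic term. It also checks that shifting the input by one period shifts the output by exactly that period.

```python
import math


def snake(x, a):
    """Snake activation: x + sin^2(a x) / a."""
    return x + math.sin(a * x) ** 2 / a


# The periodic part sin^2(a x) / a repeats every pi / a and oscillates between
# 0 and 1 / a, so larger a gives faster but smaller wiggles around the line y = x.
for a in (0.5, 1.0, 5.0, 15.0, 50.0):  # illustrative values; 15 is the post's setting
    period = math.pi / a
    peak = 1.0 / a
    x = 0.3
    # One period later the periodic part repeats, so only the linear part changes.
    shift = snake(x + period, a) - snake(x, a)
    print(f"a={a:5.1f}  period={period:.4f}  peak={peak:.4f}  shift={shift:.4f}")
```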

Takeaways

We performed an ablation study on how the choice of activation function affects a model's ability to extrapolate a sine function from a short segment of data.

Snake performs best among the similar-looking alternatives we tested. The activation closest to Snake in performance is \(x + \frac{1}{a}\sin(ax)\), which does not square the sine.

Snake has more trouble “interpolating” (in the loose sense above) a missing segment of a sine wave than extrapolating outward from a short one. This could be a limitation of Snake: how can it extrapolate outward but not “interpolate” (or, more precisely, extrapolate between training data)?

Interestingly, the models that used \(x + \sin^2(ax)\) and \(x + \frac{1}{a}\sin^2(x)\) “collapsed” to predicting a near-horizontal line.

This suggests that the precise formulation of Snake matters, and that deviations from it can lead to collapse or worse extrapolation.

We also found that the choice of \(a\) matters for Snake. If \(a\) is too small, the model does not learn to extrapolate well. For this task, Snake appears insensitive to very large values of \(a\).